SEO (search engine optimization) involves making changes to your website's design and content that make your site more attractive to search engines. There are two types of SEO: White Hat SEO and Black Hat SEO. You should follow White Hat SEO practices. To learn more, read my post Comparison of White Hat SEO Vs Black Hat SEO.

A robots.txt file is the heart of SEO because it tells search engine crawlers which pages of your website they may crawl and which they should skip. It is not a reliable way to keep a page out of search results: it is used mainly to avoid overloading your site with requests. To keep a web page out of Google, use a noindex directive or password-protect the page.

How to Optimize Your robots.txt File -:

Optimum settings for a WordPress robots.txt file:

```
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap_index.xml
```

In the robots.txt example above, I have allowed search engines to crawl the files in my WordPress uploads folder. After that, I have disallowed search bots from crawling the plugins and WordPress admin folders. With your robots.txt file, you can also disallow crawling of login pages, contact forms, shopping carts, and other pages whose sole functionality is something a crawler can't perform.
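One way to check that these rules behave as described is Python's standard-library `urllib.robotparser` module. This is a minimal sketch, assuming `example.com` and the sample paths are placeholders for your own site. Note that this parser applies the first matching rule rather than Google's most-specific-match rule; here that gives the same answers because none of the rules overlap.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt rules from this post. urllib.robotparser treats a blank
# line right after "User-Agent" as the end of the record, so the
# directives are kept together here.
ROBOTS_TXT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as loaded

# Hypothetical paths on example.com: one per rule, plus an ordinary post.
SAMPLE_PATHS = [
    "/wp-content/uploads/2021/05/image-1.png",    # matches the Allow rule
    "/wp-content/plugins/some-plugin/script.js",  # matches a Disallow rule
    "/wp-admin/options.php",                      # matches a Disallow rule
    "/how-to-optimize-robots-txt/",               # no rule matches, so allowed
]

results = {
    path: parser.can_fetch("*", "https://example.com" + path)
    for path in SAMPLE_PATHS
}

for path, allowed in results.items():
    print("allowed" if allowed else "blocked", path)
```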

Lastly, I have provided the URL of our XML sitemap. In this file, "User-Agent: *" means that the rules apply to all web robots that honor robots.txt, such as the crawlers of Google, Bing, and Yandex. Since the file above disallows certain folders, you may wonder why anyone would want to stop web robots from visiting parts of their site.
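The same parser can illustrate both points above: the "*" group answers for every crawler name, and the Sitemap line is collected separately from the rules. This is a sketch only; the bot names are sample user-agent tokens, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Sample crawler names. With no bot-specific group in the file, each one
# falls back to the catch-all "User-Agent: *" group.
BOTS = ["Googlebot", "Bingbot", "YandexBot"]

admin_access = {
    bot: parser.can_fetch(bot, "https://example.com/wp-admin/")
    for bot in BOTS
}
upload_access = {
    bot: parser.can_fetch(bot, "https://example.com/wp-content/uploads/logo.png")
    for bot in BOTS
}
print("wp-admin:", admin_access)
print("uploads:", upload_access)

# Sitemap lines are stored independently of any User-Agent group.
print("sitemaps:", parser.site_maps())
```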

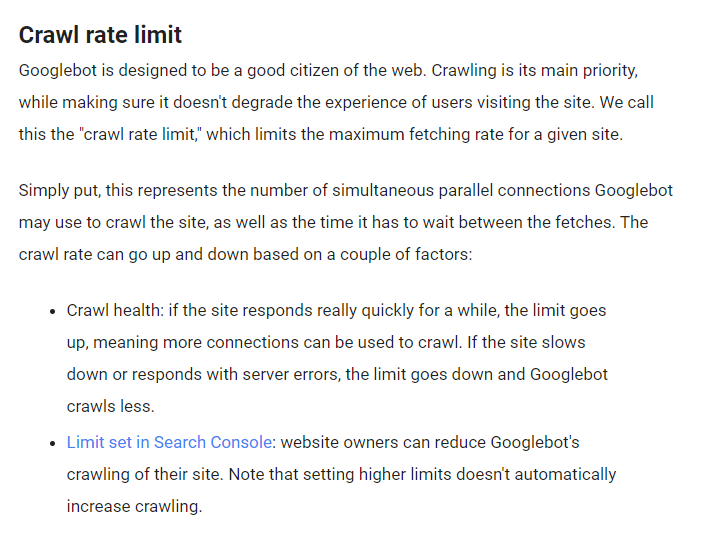

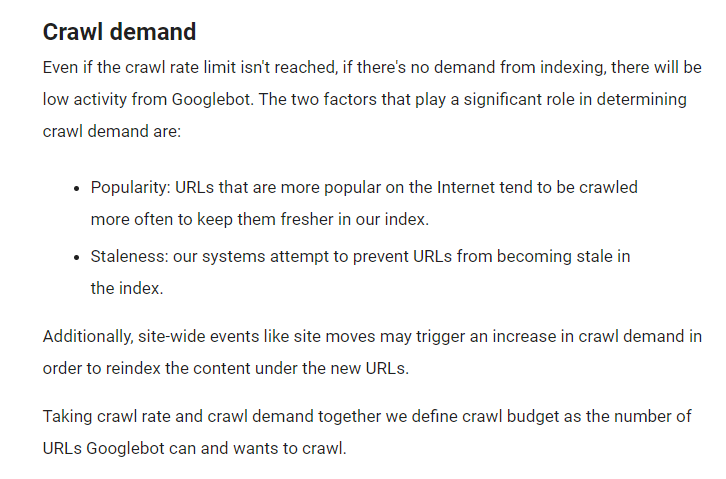

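If your website has a lot of pages, a web robot needs a long time to crawl it, and important pages may be crawled late or not at all, which can hurt your SEO ranking. Googlebot's crawling of a site is governed by two factors: the crawl rate limit, which caps how many requests Googlebot makes so that it does not overload your server, and crawl demand, which reflects how much Google wants to crawl your URLs based on their popularity and freshness.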

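Your website's crawl budget is limited, and low-value-add URLs can negatively affect a site's crawling and indexing. Google has reported that low-value-add URLs fall into these categories, in order of significance:

- Faceted navigation and session identifiers
- On-site duplicate content
- Soft error pages
- Hacked pages
- Infinite spaces and proxies
- Low-quality and spam content

Wasting server resources on pages like these drains crawl activity from pages that actually have value, which may cause a significant delay in discovering great content on a site.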
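To see how Disallow rules keep crawlers away from low-value URLs, the sketch below counts how many of a list of discovered URLs remain crawlable. It is illustrative only: the extra "Disallow: /search/" rule for internal search result pages (an example of an infinite space) and all of the URLs are hypothetical, not part of the configuration recommended above.

```python
from urllib.robotparser import RobotFileParser

# The post's rules plus one hypothetical extra rule for internal search
# result pages, which can generate an endless number of low-value URLs.
ROBOTS_TXT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Disallow: /search/
"""

# Hypothetical URLs a crawler might discover on the site.
DISCOVERED_URLS = [
    "https://example.com/how-to-optimize-robots-txt/",
    "https://example.com/white-hat-vs-black-hat-seo/",
    "https://example.com/search/robots/",
    "https://example.com/search/robots/page/2/",
    "https://example.com/search/sitemap/",
    "https://example.com/wp-admin/edit.php",
]


def split_by_crawlability(robots_txt, urls, agent="Googlebot"):
    """Return (crawlable, blocked) URL lists for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    crawlable, blocked = [], []
    for url in urls:
        (crawlable if parser.can_fetch(agent, url) else blocked).append(url)
    return crawlable, blocked


crawlable, blocked = split_by_crawlability(ROBOTS_TXT, DISCOVERED_URLS)
print(f"{len(crawlable)} of {len(DISCOVERED_URLS)} URLs left to crawl")
for url in blocked:
    print("blocked:", url)
```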


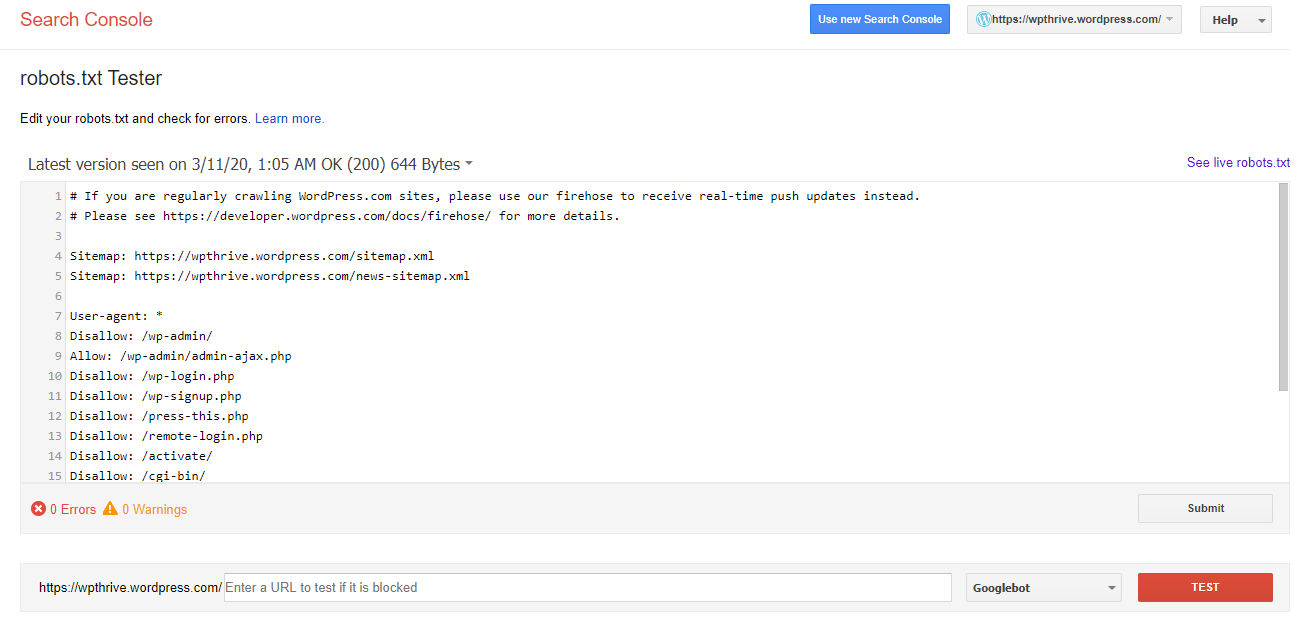

How to Test Your robots.txt File for Better SEO Ranking -:

- Head over to the robots.txt testing tool in Google Search Console and add your site as a property.

- After adding the property, you will get the option to test your robots.txt file.
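Before submitting a changed file, it can also help to preview which URLs the change would affect. The sketch below uses Python's `urllib.robotparser` to compare the crawl status of a few URLs under the current rules and a draft. The draft (which swaps the uploads Allow rule for a broader "Disallow: /wp-content/"), the URLs, and the helper names are hypothetical. `load_live()` shows how the current file could be downloaded from a real site, but it needs network access, so the example parses both versions from text.

```python
from urllib.robotparser import RobotFileParser


def load_live(site):
    """Download and parse <site>/robots.txt (requires network access)."""
    parser = RobotFileParser(site.rstrip("/") + "/robots.txt")
    parser.read()
    return parser


def load_text(text):
    """Parse robots.txt rules from a string, e.g. an unpublished draft."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def changed_urls(old, new, urls, agent="Googlebot"):
    """Return (url, allowed_before, allowed_after) for each URL whose status changes."""
    changes = []
    for url in urls:
        before = old.can_fetch(agent, url)
        after = new.can_fetch(agent, url)
        if before != after:
            changes.append((url, before, after))
    return changes


CURRENT = """\
User-Agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
"""

# Hypothetical draft: blocking all of /wp-content/ looks tidier, but it
# also hides uploaded images from crawlers.
DRAFT = """\
User-Agent: *
Disallow: /wp-content/
Disallow: /wp-admin/
"""

URLS = [
    "https://example.com/wp-content/uploads/2021/05/image-1.png",
    "https://example.com/wp-content/plugins/some-plugin/script.js",
    "https://example.com/how-to-optimize-robots-txt/",
]

# For a live site, load_live("https://example.com") could serve as `old`.
for url, before, after in changed_urls(load_text(CURRENT), load_text(DRAFT), URLS):
    print(f"{url}: {'allowed' if before else 'blocked'} -> {'allowed' if after else 'blocked'}")
```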

Conclusion-: The robots.txt file is the heart of your blog's SEO. It is very important to configure it properly, because a bad configuration may hurt your SEO ranking. If the test shows no errors, or if you want to add a rule, make your changes and submit the updated file to Google.

Thanks for reading! “Pardon my grammar; English is not my native tongue.”

If you like my work, please share it on social media! You can follow WP Knol on Facebook, Twitter, Pinterest, and YouTube for the latest updates, or subscribe to the WP Knol newsletter to get updates by email. You may also continue reading my recent posts, which might interest you.